6.5 Triangle Meshes

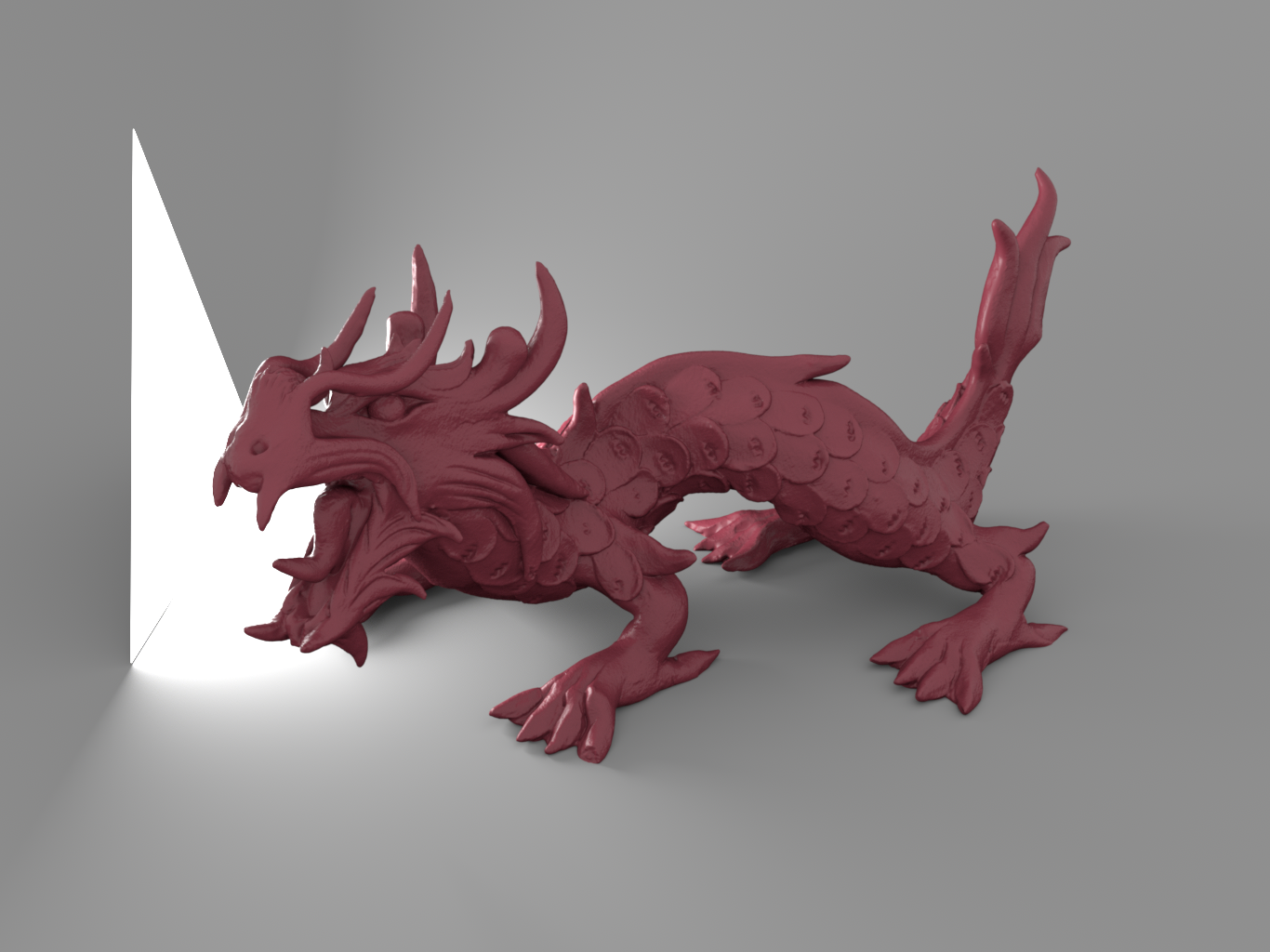

The triangle is one of the most commonly used shapes in computer graphics; complex scenes may be modeled using millions of triangles to achieve great detail. (Figure 6.11 shows an image of a complex triangle mesh of over four million triangles.)

While a natural representation would be to have a Triangle shape implementation where each triangle stored the positions of its three vertices, a more memory-efficient representation is to separately store entire triangle meshes with an array of vertex positions where each individual triangle just stores three offsets into this array for its three vertices. To see why this is the case, consider the celebrated Euler–Poincaré formula, which relates the number of vertices , edges , and faces on closed discrete meshes as

where is the genus of the mesh. The genus is usually a small number and can be interpreted as the number of “handles” in the mesh (analogous to a handle of a teacup). On a triangle mesh, the number of edges and vertices is furthermore related by the identity

This can be seen by dividing each edge into two parts associated with the two adjacent triangles. There are such half-edges, and all colocated pairs constitute the mesh edges. For large closed triangle meshes, the overall effect of the genus usually becomes negligible and we can combine the previous two equations (with ) to obtain

In other words, there are approximately twice as many faces as vertices. Since each face references three vertices, every vertex is (on average) referenced a total of six times. Thus, when vertices are shared, the total amortized storage required per triangle will be 12 bytes of memory for the offsets (at 4 bytes for three 32-bit integer offsets) plus half of the storage for one vertex—6 bytes, assuming three 4-byte floats are used to store the vertex position—for a total of 18 bytes per triangle. This is much better than the 36 bytes per triangle that storing the three positions directly would require. The relative storage savings are even better when there are per-vertex surface normals or texture coordinates in a mesh.

6.5.1 Mesh Representation and Storage

pbrt uses the TriangleMesh class to store the shared information about a triangle mesh. It is defined in the files util/mesh.h and util/mesh.cpp.

In addition to the mesh vertex positions and vertex indices, per-vertex normals n, tangent vectors s, and texture coordinates uv may be provided. The corresponding vectors should be empty if there are no such values or should be the same size as p otherwise.

The mesh data is made available via public member variables; as with things like coordinates of points or rays’ directions, there would be little benefit and some bother from information hiding in this case.

Although its constructor takes std::vector parameters, TriangleMesh stores plain pointers to its data arrays. The vertexIndices pointer points to 3 * nTriangles values, and the per-vertex pointers, if not nullptr, point to nVertices values.

We chose this design so that different TriangleMeshes could potentially point to the same arrays in memory in the case that they were both given the same values for some or all of their parameters. Although pbrt offers capabilities for object instancing, where multiple copies of the same geometry can be placed in the scene with different transformation matrices (e.g., via the TransformedPrimitive that is described in Section 7.1.2), the scenes provided to it do not always make full use of this capability. For example, with the landscape scene in Figures 5.11 and 7.2, over 400 MB is saved from detecting such redundant arrays.

The BufferCache class handles the details of storing a single unique copy of each buffer provided to it. Its LookupOrAdd() method, to be defined shortly, takes a std::vector of the type it manages and returns a pointer to memory that stores the same values.

The BufferCaches are made available through global variables in the pbrt namespace. Additional ones, not included here, handle normals, tangent vectors, and texture coordinates.

The BufferCache class is templated based on the array element type that it stores.

BufferCache allows concurrent use by multiple threads so that multiple meshes can be added to the scene in parallel; the scene construction code in Appendix C takes advantage of this capability. While a single mutex could be used to manage access to it, contention over that mutex by multiple threads can inhibit concurrency, reducing the benefits of multi-threading. Therefore, the cache is broken into 64 independent shards, each holding a subset of the entries. Each shard has its own mutex, allowing different threads to concurrently access different shards.

Buffer is a small helper class that wraps an allocation managed by the BufferCache.

The Buffer constructor computes the buffer’s hash, which is stored in a member variable.

An equality operator, which is required by the std::unordered_set, only returns true if both buffers are the same size and store the same values.

BufferHasher is another helper class, used by std::unordered_set. It returns the buffer’s already-computed hash.

The BufferCache LookUpOrAdd() method checks to see if the values stored by the provided buffer are already in the cache and returns a pointer to them if so. Otherwise, it allocates memory to store them and returns a pointer to it.

The pstd::span’s contents need to be wrapped in a Buffer instance to be able to search for a matching buffer in the cache. The buffer’s pointer is returned if it is already present. Because the cache is only read here and is not being modified, the lock_shared() capability of std::shared_mutex is used here, allowing multiple threads to read the hash table concurrently.

Otherwise, memory is allocated using the allocator to store the buffer, and the values are copied from the provided span before the Buffer is added to the cache. An exclusive lock to the mutex must be held in order to modify the cache; one is acquired by giving up the shared lock and then calling the regular lock() method.

It is possible that another thread may have added the buffer to the cache before the current thread is able to; if the same buffer is being added by multiple threads concurrently, then one will end up acquiring the exclusive lock before the other. In that rare case, a pointer to the already-added buffer is returned and the memory allocated by this thread is released.

Returning now to the TriangleMesh constructor, the vertex positions are processed next. Unlike the other shapes that leave the shape description in object space and then transform incoming rays from rendering space to object space, triangle meshes transform the shape into rendering space and thus save the work of transforming incoming rays into object space and the work of transforming the intersection’s geometric representation out to rendering space. This is a good idea because this operation can be performed once at startup, avoiding transforming rays many times during rendering. Using this approach with quadrics is more complicated, although possible—see Exercise 6.8.8 at the end of the chapter.

The resulting points are also provided to the buffer cache, though after the rendering from object transformation has been applied. Because the positions were transformed to rendering space, this cache lookup is rarely successful. The hit rate would likely be higher if positions were left in object space, though doing so would require additional computation to transform vertex positions when they were accessed. Vertex indices and uv texture coordinates fare better with the buffer cache, however.

We will omit the remainder of the TriangleMesh constructor, as handling the other per-vertex buffer types is similar to how the positions are processed. The remainder of its member variables are below. In addition to the remainder of the mesh vertex and face data, the TriangleMesh records whether the normals should be flipped by way of the values of reverseOrientation and transformSwapsHandedness. Because these two have the same value for all triangles in a mesh, memory can be saved by storing them once with the mesh itself rather than redundantly with each of the triangles.

6.5.2 Triangle Class

The Triangle class actually implements the Shape interface. It represents a single triangle.

Because complex scenes may have billions of triangles, it is important to minimize the amount of memory that each triangle uses. pbrt stores pointers to all the TriangleMeshes for the scene in a vector, which allows each triangle to be represented using just two integers: one to record which mesh it is a part of and another to record which triangle in the mesh it represents. With 4-byte ints, each Triangle uses just 8 bytes of memory.

Given this compact representation of triangles, recall the discussion in Section 1.5.7 about the memory cost of classes with virtual functions: if Triangle inherited from an abstract Shape base class that defined pure virtual functions, the virtual function pointer with each Triangle alone would double its size, assuming a 64-bit architecture with 8-byte pointers.

The bounding box of a triangle is easily found by computing a bounding box that encompasses its three vertices. Because the vertices have already been transformed to rendering space, no transformation of the bounds is necessary.

Finding the positions of the three triangle vertices requires some indirection: first the mesh pointer must be found; then the indices of the three triangle vertices can be found given the triangle’s index in the mesh; finally, the positions can be read from the mesh’s p array. We will reuse this fragment repeatedly in the following, as the vertex positions are needed in many of the Triangle methods.

The GetMesh() method encapsulates the indexing operation to get the mesh’s pointer.

Using the fact that the area of a parallelogram is given by the length of the cross product of the two vectors along its sides, the Area() method computes the triangle area as half the area of the parallelogram formed by two of its edge vectors (see Figure 6.13).

Bounding the triangle’s normal should be trivial: a cross product of appropriate edges gives its single normal vector direction. However, two subtleties that affect the orientation of the normal must be handled before the bounds are returned.

The first issue with the returned normal comes from the presence of per-vertex normals, even though it is a bound on geometric normals that NormalBounds() is supposed to return. pbrt requires that both the geometric normal and the interpolated per-vertex normal lie on the same side of the surface. If the two of them are on different sides, then pbrt follows the convention that the geometric normal is the one that should be flipped.

Furthermore, if there are not per-vertex normals, then—as with earlier shapes—the normal is flipped if either ReverseOrientation was specified in the scene description or the rendering to object transformation swaps the coordinate system handedness, but not both. Both of these considerations must be accounted for in the normal returned for the normal bounds.

Although it is not required by the Shape interface, we will find it useful to be able to compute the solid angle that a triangle subtends from a reference point. The previously defined SphericalTriangleArea() function takes care of this directly.

6.5.3 Ray–Triangle Intersection

Unlike the other shapes so far, pbrt provides a stand-alone triangle intersection function that takes a ray and the three triangle vertices directly. Having this functionality available without needing to instantiate both a Triangle and a TriangleMesh in order to do a ray–triangle intersection test is helpful in a few other parts of the system. The Triangle class intersection methods, described next, use this function in their implementations.

pbrt’s ray–triangle intersection test is based on first computing an affine transformation that transforms the ray such that its origin is at in the transformed coordinate system and such that its direction is along the axis. Triangle vertices are also transformed into this coordinate system before the intersection test is performed. In the following, we will see that applying this coordinate system transformation simplifies the intersection test logic since, for example, the and coordinates of any intersection point must be zero. Later, in Section 6.8.4, we will see that this transformation makes it possible to have a watertight ray–triangle intersection algorithm, such that intersections with tricky rays like those that hit the triangle right on the edge are never incorrectly reported as misses.

One side effect of the transformation that we will apply to the vertices is that, due to floating-point round-off error, a degenerate triangle may be transformed into a non-degenerate triangle. If an intersection is reported with a degenerate triangle, then later code that tries to compute the geometric properties of the intersection will be unable to compute valid results. Therefore, this function starts with testing for a degenerate triangle and returning immediately if one was provided.

There are three steps to computing the transformation from rendering space to the ray–triangle intersection coordinate space: a translation , a coordinate permutation , and a shear . Rather than computing explicit transformation matrices for each of these and then computing an aggregate transformation matrix to transform vertices to the coordinate space, the following implementation applies each step of the transformation directly, which ends up being a more efficient approach.

The translation that places the ray origin at the origin of the coordinate system is:

This transformation does not need to be explicitly applied to the ray origin, but we will apply it to the three triangle vertices.

Next, the three dimensions of the space are permuted so that the dimension is the one where the absolute value of the ray’s direction is largest. The and dimensions are arbitrarily assigned to the other two dimensions. This step ensures that if, for example, the original ray’s direction is zero, then a dimension with nonzero magnitude is mapped to .

For example, if the ray’s direction had the largest magnitude in , the permutation would be:

As before, it is easiest to permute the dimensions of the ray direction and the translated triangle vertices directly.

Finally, a shear transformation aligns the ray direction with the axis:

To see how this transformation works, consider its operation on the ray direction vector .

For now, only the and dimensions are sheared; we can wait and shear the dimension only if the ray intersects the triangle.

Note that the calculations for the coordinate permutation and the shear coefficients only depend on the given ray; they are independent of the triangle. In a high-performance ray tracer, it may be worthwhile to compute these values once and store them in the Ray class, rather than recomputing them for each triangle the ray is intersected with.

With the triangle vertices transformed to this coordinate system, our task now is to find whether the ray starting from the origin and traveling along the axis intersects the transformed triangle. Because of the way the coordinate system was constructed, this problem is equivalent to the 2D problem of determining if the , coordinates are inside the projection of the triangle (Figure 6.12).

To understand how the intersection algorithm works, first recall from Figure 3.6 that the length of the cross product of two vectors gives the area of the parallelogram that they define. In 2D, with vectors and , the area is

Half of this area is the area of the triangle that they define. Thus, we can see that in 2D, the area of a triangle with vertices , , and is

Figure 6.13 visualizes this idea geometrically.

We will use this expression of triangle area to define a signed edge function: given two triangle vertices and , we can define the directed edge function as the function that gives twice the area of the triangle given by , , and a given third point :

(See Figure 6.14.)

The edge function gives a positive value for points to the right of the line, and a negative value for points to the left. Thus, if a point has edge function values of the same sign for all three edges of a triangle, it must be on the same side of all three edges and thus must be inside the triangle.

Thanks to the coordinate system transformation, the point that we are testing has coordinates . This simplifies the edge function expressions. For example, for the edge from to , we have:

In the following, we will use the indexing scheme that the edge function corresponds to the directed edge from vertex to .

In the rare case that any of the edge function values is exactly zero, it is not possible to be sure if the ray hits the triangle or not, and the edge equations are reevaluated using double-precision floating-point arithmetic. (Section 6.8.4 discusses the need for this step in more detail.) The fragment that implements this computation, <<Fall back to double-precision test at triangle edges>>, is just a reimplementation of <<Compute edge function coefficients e0, e1, and e2>> using doubles and so is not included here.

Given the values of the three edge functions, we have our first two opportunities to determine that there is no intersection. First, if the signs of the edge function values differ, then the point is not on the same side of all three edges and therefore is outside the triangle. Second, if the sum of the three edge function values is zero, then the ray is approaching the triangle edge-on, and we report no intersection. (For a closed triangle mesh, the ray will hit a neighboring triangle instead.)

Because the ray starts at the origin, has unit length, and is along the axis, the coordinate value of the intersection point is equal to the intersection’s parametric value. To compute this value, we first need to go ahead and apply the shear transformation to the coordinates of the triangle vertices. Given these values, the barycentric coordinates of the intersection point in the triangle can be used to interpolate them across the triangle. They are given by dividing each edge function value by the sum of edge function values:

Thus, the sum to one.

The interpolated value is given by

where are the coordinates of the three vertices in the ray–triangle intersection coordinate system.

To save the cost of the floating-point division to compute in cases where the final value is out of the range of valid values, the implementation here first computes by interpolating with (in other words, not yet performing the division by ). If the sign of and the sign of the interpolated value are different, then the final value will certainly be negative and thus not a valid intersection.

Along similar lines, the check can be equivalently performed in two ways:

Given a valid intersection, the actual barycentric coordinates and value for the intersection are found.

After a final test on the value that will be discussed in Section 6.8.7, a TriangleIntersection object that represents the intersection can be returned.

TriangleIntersection just records the barycentric coordinates and the value along the ray where the intersection occurred.

The structure of the Triangle::Intersect() method follows the form of earlier intersection test methods.

We will not include the Triangle::IntersectP() method here, as it is just based on calling IntersectTriangle().

The InteractionFromIntersection() method is different than the corresponding methods in the quadrics in that it is a stand-alone function rather than a regular member function. Because a call to it is thus not associated with a specific Triangle instance, it takes a TriangleMesh and the index of a triangle in the mesh as parameters. In the context of its usage in the Intersect() method, this may seem gratuitous—why pass that information as parameters rather than access it directly in a non-static method?

We have designed the interface in this way so that we are able to use this method in pbrt’s GPU rendering path, where the Triangle class is not used. There, the representation of triangles in the scene is abstracted by a ray intersection API and the geometric ray–triangle intersection test is performed using specialized hardware. Given an intersection, it provides the triangle index, a pointer to the mesh that the triangle is a part of, and the barycentric coordinates of the intersection. That information is sufficient to call this method, which then allows us to find the SurfaceInteraction for such intersections using the same code as executes on the CPU.

To generate consistent tangent vectors over triangle meshes, it is necessary to compute the partial derivatives and using the parametric values at the triangle vertices, if provided. Although the partial derivatives are the same at all points on the triangle, the implementation here recomputes them each time an intersection is found. Although this results in redundant computation, the storage savings for large triangle meshes can be significant.

A triangle can be described by the set of points

for some , where and range over the parametric coordinates of the triangle. We also know the three vertex positions , , and the texture coordinates at each vertex. From this it follows that the partial derivatives of must satisfy

In other words, there is a unique affine mapping from the 2D space to points on the triangle. (Such a mapping exists even though the triangle is specified in 3D because the triangle is planar.) To compute expressions for and , we start by computing the differences and , giving the matrix equation

Thus,

Inverting a 22 matrix is straightforward. The inverse of the differences matrix is

This computation is performed by the <<Compute triangle partial derivatives>> fragment, with handling for various additional corner cases.

The triangle’s uv coordinates are found by indexing into the TriangleMesh::uv array, if present. Otherwise, a default parameterization is used. We will not include the fragment that initializes uv here.

In the usual case, the matrix is non-degenerate, and the partial derivatives are computed using Equation (6.7).

However, there are a number of rare additional cases that must be handled. For example, the user may have provided coordinates that specify a degenerate parameterization, such as the same at all three vertices. Alternatively, the computed dpdu and dpdv values may have a degenerate cross product due to rounding error. In such cases we fall back to computing dpdu and dpdv that at least give the correct normal vector.

To compute the intersection point and the parametric coordinates at the hit point, the barycentric interpolation formula is applied to the vertex positions and the coordinates at the vertices. As we will see in Section 6.8.5, this gives a more accurate result for the intersection point than evaluating the parametric ray equation using t.

Unlike with the shapes we have seen so far, it is not necessary to transform the SurfaceInteraction here to rendering space, since the geometric per-vertex values are already in rendering space. Like the disk, the partial derivatives of the triangle’s normal are also both , since it is flat.

Before the SurfaceInteraction is returned, some final details related to its surface normal and shading geometry must be taken care of.

The SurfaceInteraction constructor initializes the geometric normal n as the normalized cross product of dpdu and dpdv. This works well for most shapes, but in the case of triangle meshes it is preferable to rely on an initialization that does not depend on the underlying texture coordinates: it is fairly common to encounter meshes with bad parameterizations that do not preserve the orientation of the mesh, in which case the geometric normal would have an incorrect orientation.

We therefore initialize the geometric normal using the normalized cross product of the edge vectors dp02 and dp12, which results in the same normal up to a potential sign difference that depends on the exact order of triangle vertices (also known as the triangle’s winding order). 3D modeling packages generally try to ensure that triangles in a mesh have consistent winding orders, which makes this approach more robust.

With Triangles, the user can provide normal vectors and tangent vectors at the vertices of the mesh that are interpolated to give normals and tangents at points on the faces of triangles. Shading geometry with interpolated normals can make otherwise faceted triangle meshes appear to be smoother than they geometrically are. If either shading normals or shading tangents have been provided, they are used to initialize the shading geometry in the SurfaceInteraction.

Given the barycentric coordinates of the intersection point, it is easy to compute the shading normal by interpolating among the appropriate vertex normals, if present.

The shading tangent is computed similarly.

The bitangent vector ts is found using the cross product of ns and ss, giving a vector orthogonal to the two of them. Next, ss is overwritten with the cross product of ts and ns; this ensures that the cross product of ss and ts gives ns. Thus, if per-vertex and values are provided and if the interpolated and values are not perfectly orthogonal, will be preserved and will be modified so that the coordinate system is orthogonal.

The code to compute the partial derivatives and of the shading normal is almost identical to the code to compute the partial derivatives and . Therefore, it has been elided from the text here.

6.5.4 Sampling

The uniform area triangle sampling method is based on mapping the provided random sample u to barycentric coordinates that are uniformly distributed over the triangle.

Uniform barycentric sampling is provided via a stand-alone utility function (to be described shortly), which makes it easier to reuse this functionality elsewhere.

As with Triangle::NormalBounds(), the surface normal of the sampled point is affected by the orientation of the shading normal, if present.

The coordinates for the sampled point are also found with barycentric interpolation.

Because barycentric interpolation is linear, it can be shown that if we can find barycentric coordinates that uniformly sample a specific triangle, then those barycentrics can be used to uniformly sample any triangle. To derive the sampling algorithm, we will therefore consider the case of uniformly sampling a unit right triangle. Given a uniform sample in that we would like to map to the triangle, the task can also be considered as finding an area-preserving mapping from the unit square to the unit triangle.

A straightforward approach is suggested by Figure 6.15: the unit square could be folded over onto itself, such that samples that are on the side of the diagonal that places them outside the triangle are reflected across the diagonal to be inside it. While this would provide a valid sampling technique, it is undesirable since it causes samples that were originally far away in to be close together on the triangle. (For example, and in the unit square would both map to the same point in the triangle.) The effect would be that sampling techniques that generate well-distributed uniform samples such as those discussed in Chapter 8 were less effective at reducing error.

A better mapping translates points along the diagonal by a varying amount that brings the two opposite sides of the unit square to the triangle’s diagonal.

The determinant of the Jacobian matrix for this mapping is a constant and therefore this mapping is area preserving and uniformly distributed samples in the unit square are uniform in the triangle. (Recall Section 2.4.1, which presented the mathematics of transforming samples from one domain to the other; there it was shown that if the Jacobian of the transformation is constant, the mapping is area-preserving.)

The usual normalization constraint gives the PDF in terms of the triangle’s surface area.

In order to sample points on spheres with respect to solid angle from a reference point, we derived a specialized sampling method that only sampled from the potentially visible region of the sphere. For the cylinder and disk, we just sampled uniformly by area and rescaled the PDF to account for the change of measure from area to solid angle. It is tempting to do the same for triangles (and, indeed, all three previous editions of this book did so), but going through the work to apply a solid angle sampling approach can lead to much better results.

To see why, consider a simplified form of the reflection integral from the scattering equation, (4.14):

where the BRDF has been replaced with a constant , which corresponds to a diffuse surface. If we consider the case of incident radiance only coming from a triangular light source that emits uniform diffuse radiance , then we can rewrite this integral as

where is a visibility function that is 1 if the ray from in direction hits the light source and 0 if it misses or is occluded by another object. If we sample the triangle uniformly within the solid angle that it subtends from the reference point, we end up with the estimator

where is the subtended solid angle. The constant values have been pulled out, leaving just the two factors in parentheses that vary based on . They are the only source of variance in estimates of the integral.

As an alternative, consider a Monte Carlo estimate of this function where a point has been uniformly sampled on the surface of the triangle. If the triangle’s area is , then the PDF is . Applying the standard Monte Carlo estimator and defining a new visibility function that is between two points, we end up with

where the last factor accounts for the change of variables and where is the angle between the light source’s surface normal and the vector between the two points. The values of the four factors inside the parentheses in this estimator all depend on the choice of .

With area sampling, the factor adds some additional variance, though not too much, since it is between 0 and 1. However, can have unbounded variation over the surface of the triangle, which can lead to high variance in the estimator since the method used to sample does not account for it at all. This variance increases the larger the triangle is and the closer the reference point is to it. Figure 6.16 shows a scene where solid angle sampling significantly reduces error.

The Triangle::Sample() method that takes a reference point therefore samples a point according to solid angle.

Triangles that subtend a very small solid angle as well as ones that cover nearly the whole hemisphere can encounter problems with floating-point accuracy in the following solid angle sampling approach. The sampling method falls back to uniform area sampling in those cases, which does not hurt results in practice: for very small triangles, the various additional factors tend not to vary as much over the triangle’s area. pbrt also samples the BSDF as part of the direct lighting calculation, which is an effective strategy for large triangles, so uniform area sampling is fine in that case as well.

pbrt also includes an approximation to the effect of the factor in its triangle sampling algorithm, which leaves visibility and error in that approximation as the only sources of variance. We will defer discussion of the fragment that handles that, <<Apply warp product sampling for cosine factor at reference point>>, until after we have discussed the uniform solid angle sampling algorithm. For now we will note that it affects the final sampling PDF, which turns out to be the product of the PDF for uniform solid angle sampling of the triangle and a correction factor.

Uniform sampling of the solid angle that a triangle subtends is equivalent to uniformly sampling the spherical triangle that results from its projection on the unit sphere (recall Section 3.8.2). Spherical triangle sampling is implemented in a separate function described shortly, SampleSphericalTriangle(), that returns the barycentric coordinates for the sampled point.

Given the barycentric coordinates, it is simple to compute the sampled point. With that as well as the surface normal, computed by reusing a fragment from the other triangle sampling method, we have everything necessary to return a ShapeSample.

The spherical triangle sampling function takes three triangle vertices v, a reference point p, and a uniform sample u. The value of the PDF for the sampled point is optionally returned via pdf, if it is not nullptr. Figure 6.17 shows the geometric setting.

Given the reference point, it is easy to project the vertices on the unit sphere to find the spherical triangle vertices , , and .

Because the plane containing an edge also passes through the origin, we can compute the plane normal for an edge from to as

and similarly for the other edges. If any of these normals are degenerate, then the triangle has zero area.

Given the pairs of plane normals, AngleBetween() gives the angles between them. In computing these angles, we can take advantage of the fact that the plane normal for the edge between two vertices and is the negation of the plane normal for the edge from to .

The spherical triangle sampling algorithm operates in two stages. The first step uniformly samples a new triangle with area between 0 and the area of the original spherical triangle using the first sample: . This triangle is defined by finding a new vertex along the arc between and such that the resulting triangle has area (see Figure 6.18(a)).

In the second step, a vertex is sampled along the arc between and with sampling density that is relatively lower near and higher near ; the result is a uniform distribution of points inside the spherical triangle (Figure 6.18(b)). (The need for a nonuniform density along the arc can be understood by considering the arcs as sweeps from to : the velocity of a point on the arc increases the farther away from and so the density of sampled points along a given arc must be adjusted accordingly.)

Our implementation makes a small modification to the algorithm as described so far: rather than computing the triangle’s spherical area and then sampling an area uniformly between 0 and , it instead starts by computing its area plus , which we will denote by . Doing so lets us avoid the subtraction of from the sum of the interior angles that is present in the spherical triangle area equation, (3.5). For very small triangles, where , the subtraction of can cause a significant loss of floating-point accuracy. The remainder of the algorithm’s implementation is then adjusted to be based on in order to avoid needing to perform the subtraction.

Given , the sampled area-plus- is easily computed by uniformly sampling between and .

The returned PDF value can be initialized at this point; because the algorithm samples uniformly in the triangle’s solid angle, the probability density function takes the constant value of one over the solid angle the triangle subtends, which is its spherical area. (For that, the value of must be subtracted.)

At this point, we need to determine more values related to the sampled triangle. We have the vertices and , the edge , and the angle all unchanged from the given triangle. The area-plus- of the sampled triangle is known, but we do not have the vertex , the edges or , or the angles or .

To find the vertex , it is sufficient to find the length of the arc . In this case, will do, since . The first step is to apply one of the spherical cosine laws, which gives the equality

Although we do not know , we can apply the definition of ,

to express in terms of quantities that are either known or are :

Defining to simplify notation, we have

The cosine and sine sum identities then give

The only remaining unknowns on the right hand side are the sines and cosines of .

To find and , we can use another spherical cosine law, which gives the equality

It can be simplified in a similar manner to find the equation

The terms in parentheses are all known. We will denote them by and . It is then easy to see that solutions to the equation

are given by

Substituting these into Equation (6.9), taking the solution with a positive cosine, and simplifying gives

which finally has only known values on the right hand side.

The code to compute this cosine follows directly from this solution. In it, we have also applied trigonometric identities to compute and in terms of other sines and cosines.

The arc of the great circle between the two points and can be parameterized by , where is the normalized perpendicular component of with respect to . This vector is given by the GramSchmidt() function introduced earlier, which makes the computation of straightforward. In this case, can then be found using with the Pythagorean identity, since we know that it must be nonnegative.

For the sample points to be uniformly distributed in the spherical triangle, it can be shown that if the edge from to is parameterized using in the same way as was used for the edge from to , then should be sampled as

(The “Further Reading” section has pointers to the details.)

With that, we can compute the final sampled direction . The remaining step is to compute the barycentric coordinates for the sampled direction.

The barycentric coordinates of the corresponding point in the planar triangle can be found using part of a ray–triangle intersection algorithm that finds the barycentrics along the way (Möller and Trumbore 1997). It starts with equating the parametric form of the ray with the barycentric interpolation of the triangle’s vertices ,

expressing this as a matrix equation, and solving the resulting linear system for the barycentrics. The solution is implemented in the following fragment, which includes the result of factoring out various common subexpressions.

The computed barycentrics may be invalid for very small and very large triangles. This happens rarely, but to protect against it, they are clamped to be within the triangle before they are returned.

As noted earlier, uniform solid angle sampling does not account for the incident cosine factor at the reference point. Indeed, there is no known analytic method to do so. However, it is possible to apply a warping function to the uniform samples u that approximately accounts for this factor.

To understand the idea, first note that varies smoothly over the spherical triangle. Because the spherical triangle sampling algorithm that we have just defined maintains a continuous relationship between sample values and points in the triangle, then if we consider the image of the function back in the sampling domain, as would be found by mapping it through the inverse of the spherical triangle sampling algorithm, the function is smoothly varying there as well. (See Figure 6.19.)

It can be shown through simple application of the chain rule that a suitable transformation of uniform sample points can account for the factor. Specifically, if transformed points are distributed according to the distribution of in and then used with the spherical triangle sampling algorithm, then the distribution of directions on the sphere will include the factor.

The true function has no convenient analytic form, but because it is smoothly varying, here we will approximate it with a bilinear function. Each corner of the sampling domain maps to one of the three vertices of the spherical triangle, and so we set the bilinear function’s value at each corner according to the factor computed at the associated triangle vertex.

Sampling a point in the triangle then proceeds by using the initial uniform sample to sample the bilinear distribution and to use the resulting nonuniform point in with the triangle sampling algorithm. (See Figure 6.20.)

Applying the principles of transforming between distributions that were introduced in Section 2.4.1, we can find that the overall PDF of such a sample is given by the product of the PDF for the bilinear sample and the PDF of the spherical triangle sample.

This technique is only applied for reference points on surfaces. For points in scattering media, the surface normal ShapeSampleContext::ns is degenerate and no sample warping is applied.

For the spherical triangle sampling algorithm, the vertex v0 corresponds to the sample , v1 to and (and the line in between), and v2 to . Therefore, the sampling weights at the corners of the domain are computed using the cosine of the direction to the corresponding vertex.

The associated PDF() method is thankfully much simpler than the sampling routine.

It is important that the PDF() method makes exactly the same decisions about which technique is used to sample the triangle as the Sample() method does. This method therefore starts with the same check for very small and very large triangles to determine whether it should fall back to returning the PDF based on uniform area sampling.

If Sample() would have warped the initial uniform random sample to account for the incident factor, it is necessary to incorporate the corresponding change of variables factor in the returned PDF here. To do so, we need to be able to invert the spherical triangle sampling algorithm in order to determine the sample value u that samples a point on the triangle that gives the incident direction wi at the reference point. The InvertSphericalTriangleSample() function performs this computation.

The pair of sample values that give a sampled direction can be found by inverting each of the sampling operations individually. The function that performs this computation starts out with a few reused fragments to compute the angles at the three vertices of the spherical triangle.

Next, it finds the vertex along the arc between and that defines the subtriangle that would have been sampled when sampling . This vertex can be found by computing the intersection of the great circle defined by and and the great circle defined by and ; see Figure 6.21.

Recall from Section 3.8.2 that the great circle passing through two points on the sphere is given by the intersection of the sphere with a plane passing through the origin and those two points. Therefore, we can find the intersection between the two corresponding planes, which is a line. In 3D, the cross product of the plane normals gives this line’s direction. This line intersects the sphere at two points and so it is necessary to choose the one of them that is between and .

Given , it is easy to compute the area of the triangle ; the ratio of that area to the original area gives the first sample value u0. However, it is necessary to be aware of the case where and are nearly coincident; in that case, computation of the angle may have high error, sometimes to the point that the subtriangle seems to have larger area than the original triangle . That case is caught with a dot product test.

Otherwise, the area of the subtriangle is computed using Girard’s theorem.

The first sample value is then easily found given these two areas.

The sampling method for choosing along the arc through and , Equation (6.10), is also easily inverted.