12.6 Light Sampling

Due to the linearity assumption in radiometry, illumination at a point in a scene with multiple light sources can be computed by summing the independent contributions of each light. As we have seen before, however, correctness alone is not always sufficient—if it were, we might have sampled ImageInfiniteLights uniformly with the suggestion that one take thousands of samples per pixel until error has been reduced sufficiently. Especially in scenes with thousands or more independent light sources, considering all of them carries too much of a performance cost.

Fortunately, here, too, is a setting where stochastic sampling can be applied. An unbiased estimator for a sum of terms is given by

where the probability mass function (PMF) for any term where is nonzero and where . This is the discrete analog to the integral Monte Carlo estimator, Equation (2.7). Therefore, we can replace any sum over all the scene’s light sources with a sum over just one or a few of them, where the contributions are weighted by one over the probability of sampling the ones selected.

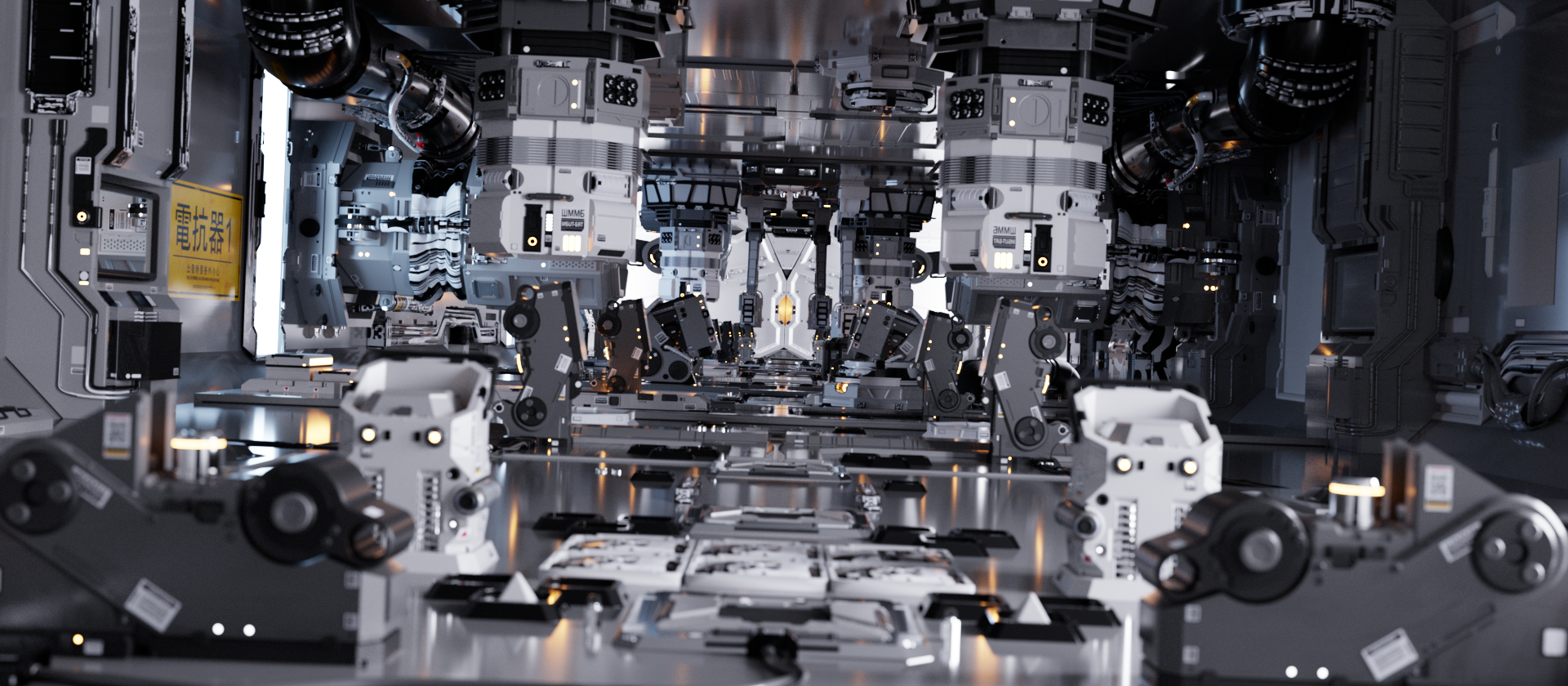

Figure 12.23 is a rendered image of a scene with 8,878 light sources. A few observations motivate some of the light sampling algorithms to come. At any given point in the scene, some lights are facing away and others are occluded. Ideally, such lights would be given a zero sampling probability. Furthermore, often many lights are both far away from a given point and have relatively low power; such lights should have a low probability of being sampled. (Consider, for example, the small yellow lights inset in the machinery.) Of course, even a small and dim light is important to points close to it. Therefore, the most effective light sampling probabilities will vary across the scene depending on position, surface normal, the BSDF, and so forth.

The LightSampler class defines the LightSampler interface for sampling light sources. It is defined in the file base/lightsampler.h. LightSampler implementations can be found in lightsamplers.h and lightsamplers.cpp.

The key LightSampler method is Sample(), which takes a uniform 1D sample and information about a reference point in the form of a LightSampleContext. When sampling is successful, a SampledLight is returned. Otherwise, the optional value is left unset, as may happen if the light sampler is able to determine that no lights illuminate the provided point.

SampledLight just wraps up a light and the discrete probability for it having been sampled.

In order to compute the MIS weighting term when a ray happens to hit a light source, it is necessary to be able to find the value of the probability mass function for sampling a particular light. This task is handled by PMF() method implementations.

LightSamplers must also provide methods to sample a light and return the corresponding probability independent of a specific point being illuminated. These methods are useful for light transport algorithms like bidirectional path tracing that start paths at the light sources.

12.6.1 Uniform Light Sampling

UniformLightSampler is the simplest possible light sampler: it samples all lights with uniform probability. In practice, more sophisticated sampling algorithms are usually much more effective, but this one is easy to implement and provides a useful baseline for comparing light sampling techniques.

As with all light samplers, an array of all the lights in the scene is provided to the constructor; UniformLightSampler makes a copy of them in a member variable.

Since the light sampling probabilities do not depend on the lookup point, we will only include the variants of Sample() and PMF() that do not take a LightSampleContext here. The versions of these methods that do take a context just call these variants. For sampling, a light is chosen by scaling the provided uniform sample by the array size and returning the corresponding light.

Given uniform sampling probabilities, the value of the PMF is always one over the number of lights.

12.6.2 Power Light Sampler

PowerLightSampler sets the probability for sampling each light according to its power. Doing so generally gives better results than sampling uniformly, but the lack of spatial variation in sampling probabilities limits its effectiveness. (We will return to this topic at the end of this section where some comparisons between the two techniques are presented.)

Its constructor also makes a copy of the provided lights but initializes some additional data structures as well.

To efficiently return the value of the PMF for a given light, it is necessary to be able to find the index in the lights array of a given light. Therefore, the constructor also initializes a hash table that maps from Lights to indices.

The PowerLightSampler uses an AliasTable for sampling. It is initialized here with weights based on the emitted power returned by each light’s Phi() method. Note that if the light’s emission distribution is spiky (e.g., as with many fluorescent lights), there is a risk of underestimating its power if a spike is missed. We have not found this to be a problem in practice, however.

Given the alias table, sampling is easy.

To evaluate the PMF, the hash table gives the mapping to an index in the array of lights. In turn, the PMF returned by the alias table for the corresponding entry is the probability of sampling the light.

As with the UniformLightSampler, the Sample() and PMF() methods that do take a LightSampleContext just call the corresponding methods that do not take one.

Sampling lights based on their power usually works well. Figure 12.24 compares both sampling methods using the Zero Day scene. For this scene, noise is visibly reduced when sampling according to power, and mean squared error (MSE) is improved by a factor of 12.4.

Although sampling according to power generally works well, it is not optimal. Like uniform sampling, it is hindered by not taking the geometry of emitters and the relationship between emitters and the receiving point into account. Relatively dim light sources may make the greatest visual contribution in a scene, especially if the bright ones are far away, mostly occluded, or not visible at all.

As an extreme example of this problem with sampling according to power, consider a large triangular light source that emits a small amount of radiance. The triangle’s emitted power can be made arbitrarily large by scaling it to increase its total area. However, at any point in the scene the triangle can do no more than subtend a hemisphere, which limits its effect on the total incident radiance at a point. Sampling by power can devote far too many samples to such lights.

12.6.3 BVH Light Sampling

Varying the light sampling probabilities based on the point being shaded can be an effective light sampling strategy, though if there are more than a handful of lights, some sort of data structure is necessary to do this without having to consider every light at each point being shaded. One widely used approach is to construct a hierarchy over the light sources with the effect of multiple lights aggregated into the higher nodes of the hierarchy. This representation makes it possible to traverse the hierarchy to find the important lights near a given point.

Given a good light hierarchy, it is possible to render scenes with hundreds of thousands or even millions of light sources nearly as efficiently as a scene with just one light. In this section, we will describe the implementation of the BVHLightSampler, which applies bounding volume hierarchies to this task.

Bounding Lights

When bounding volume hierarchies (BVHs) were used for intersection acceleration structures in Section 7.3, it was necessary to abstract away the details of the various types of primitives and underlying shapes that they stored so that the BVHAggregate did not have to be explicitly aware of each of them. There, the primitives’ rendering-space bounding boxes were used for building the hierarchy. Although there were cases where the quality of the acceleration structure might have been improved using shape-specific information (e.g., if the acceleration structure was aware of skinny diagonal triangles with large bounding boxes with respect to the triangle’s area), the BVHAggregate’s implementation was substantially simplified with that approach.

We would like to have a similar decoupling for the BVHLightSampler, though it is less obvious what the right abstraction should be. For example, we might want to know that a spotlight only emits light in a particular cone, so that the sampler does not choose it for points outside the cone. Similarly, we might want to know that a one-sided area light only shines light on one side of a particular plane. For all sorts of lights, knowing their total power would be helpful so that brighter lights can be sampled preferentially to dimmer ones. Of course, power does not tell the whole story, as the light’s spatial extent and relationship to a receiving point affect how much power is potentially received at that point.

The LightBounds structure provides the abstraction used by pbrt for these purposes. It stores a variety of values that make it possible to represent the emission distribution of a variety of types of light.

It is evident that the spatial bounds of the light and its emitted power will be useful quantities, so those are included in LightBounds. However, this representation excludes light sources at infinity such as the DistantLight and the various infinite lights. That limitation is fine, however, since it is unclear how such light sources would be stored in a BVH anyway. (The BVHLightSampler therefore handles these types of lights separately.)

As suggested earlier, bounding a light’s directional emission distribution is important for sampling lights effectively. The representation used here is based on a unit vector that specifies a principal direction for the emitter’s surface normal and two angles that specify its variation. First, specifies the maximum deviation of the emitter’s surface normal from the principal normal direction . Second, specifies the angle beyond up to which there may be emission (see Figure 12.25). Thus, directions that make an angle up to with may receive illumination from a light and those that make a greater angle certainly do not.

While this representation may seem overly specialized for emissive shapes alone, it works well for all of pbrt’s (noninfinite) light types. For example, a point light can be represented with an arbitrary average normal and an angle of for . A spotlight can use the direction it is facing for , its central cone angle for , and the angular width of its falloff region for .

Our implementation stores the cosine of these angles rather than the angles themselves; this representation will make it possible to avoid the expense of evaluating a number of trigonometric functions in the following.

The last part of the emission bounds for a light is a twoSided flag, which indicates whether the direction should be negated to specify a second cone that uses the same pair of angles.

The LightBounds constructor takes corresponding parameters and initializes the member variables. The implementation is straightforward, and so is not included here.

To cluster lights into a hierarchy, we will need to be able to find the bounds that encompass two specified LightBounds objects. This capability is provided by the Union() function.

It is worthwhile to start out by checking for the easy case of one or the other specified LightBounds having zero power. In this case, the other can be returned immediately.

Otherwise, a new average normal direction and updated angles and must be computed. Most of the work involved is handled by the DirectionCone’s Union() method, which takes a pair of cones of directions and returns one that bounds the two of them. The cosine of the new angle is then given by the cosine of the spread angle of that cone.

The value of should be the maximum of the values for the two cones. However, because LightBounds stores the cosines of the angles and because the cosine function is monotonically decreasing over the range of possible values, , we take the minimum cosine of the two angles.

The remainder of the parameter values for the LightBounds constructor are easily computed from the two LightBounds that were provided.

A utility method returns the centroid of the spatial bounds; this value will be handy when building the light BVH.

The Importance() method provides the key LightBounds functionality: it returns a scalar value that estimates the contribution of the light or lights represented in the LightBounds at a given point. If the provided normal is nondegenerate, it is assumed to be the surface normal at the receiving point. Scattering points in participating media pass a zero-valued Normal3f.

It is necessary to make a number of assumptions in order to estimate the amount of light arriving at a point given a LightBounds. While it will be possible to make use of principles such as the received power falling off with the squared distance from the emitter or the incident irradiance at a surface varying according to Lambert’s law, some approximations are inevitable, given the loss of specifics that comes with adopting the LightBounds representation.

Even computing the squared distance for the falloff of received power is challenging if bounds is not degenerate: to which point in bounds should the distance be computed? It may seem that finding the minimum distance from the point p to the bounds would be a safe choice, though this would imply a very small distance for a point close to the bounds and a zero distance for a point inside it. Either of these would lead to a very large factor and potentially high error due to giving too much preference to such a LightBounds. Further, choosing between the two child LightBounds of a node when a point is inside both would be impossible, given infinite weights for each.

Therefore, our first fudge is to compute the distance from the center of the bounding box but further to ensure that the squared distance is not too small with respect to the length of the diagonal of the bounding box. Thus, for larger bounding boxes with corresponding uncertainty about what the actual spatial distribution of emission is, the inverse squared distance factor cannot become too large.

In the following computations, we will need to produce a series of values of the form and . Given the sine and cosine of and , it is possible to do so without evaluating any trigonometric functions. To see how, consider the cosine: implies that and that therefore . We thus start by checking that case and returning if it is true. We are otherwise left with , which can be expressed in terms of the sines and cosines of the two angles using a trigonometric identity, . The case for sine follows analogously.

Two simple lambda functions provide these capabilities. (Only the one for cosine is included in the text, as sinSubClamped follows a similar form.)

There are a number of angles involved in the importance computation. In addition to the ones that specify the directional emission bounds, and , we will start by computing the sine and cosine of , the angle between the principal normal direction and the vector from the center of the light bounds to the reference point (Figure 12.26(a)).

To bound the variation of various angles across the extent of the bounding box, we will also make use of the angle that the bounding box subtends with respect to the reference point (see Figure 12.26(b)). We will denote this angle . The preexisting DirectionCone::BoundSubtendedDirections() function takes care of computing its cosine. Its sine follows directly.

The last angle we will use is the minimum angle between the emitter’s normal and the vector to the reference point. We will denote it by , and it is given by

see Figure 12.27. As with the other angles, we only need its sine and cosine, which can be computed one subtraction at a time.

If this angle is greater than (or, here, if its cosine is less than ), then it is certain that the lights represented by the bounds do not illuminate the reference point and an importance value of 0 can be returned immediately.

The importance value can now be computed. It starts with the product of the power of the lights, the factor that accounts for the cosine at the emitter, and the inverse squared distance.

At a surface, the importance also accounts for a conservative estimate of the incident cosine factor there. We have wi, the unit vector from the reference point to the center of the LightBounds, but would like to conservatively set the importance based on the maximum value of the incident cosine over the entire bounding box. The corresponding minimum angle with the surface normal is given by (see Figure 12.28).

Our implementation of this computation uses the cosSubClamped() lambda function introduced earlier to compute the cosine of the angle directly using the sines and cosines of the two contributing angles.

Bounds for Light Implementations

Given the definition of LightBounds, we will add another method to the Light interface to allow lights to return bounds on their emission.

Lights at infinity return an unset optional value. Here, for example, is the implementation of this method for ImageInfiniteLight. The other infinite lights and the DistantLight do likewise.

The PointLight’s implementation is just a few lines of code, as befitting the simplicity of that type of light source. The spatial bounds are given by the light’s rendering space position and the total emitted power is easily computed following the approach in PointLight::Phi(). Because this light shines in all directions, the average normal direction is arbitrary and is , corresponding to the full sphere of directions.

The SpotLight’s bounding method is a bit more interesting: now the average normal vector is relevant; it is set here to be the light’s direction. The range is set to be the angular width of the inner cone of the light and corresponds to the width of its falloff at the edges. While this falloff does not exactly match the cosine-weighted falloff used in the LightBounds::Importance() method, it is close enough for these purposes.

There is a subtlety in the computation of phi for this light: it is computed as if the light source was an isotropic point source and is not affected by the spotlight’s cone angle, like the computation in SpotLight::Phi() is. To understand the reason for this, consider two spotlights with the same radiant intensity, one with a very narrow cone and one with a wide cone, both illuminating some point in the scene. The total power emitted by the former is much less than the latter, though for a point inside both of their cones, both should be sampled with equal probability—effectively, the cone is accounted for in the light importance function and so should not be included in the phi value supplied here.

We will skip past the implementations of the ProjectionLight and GoniometricLight Bounds() methods, which are along similar lines.

The DiffuseAreaLight’s Bounds() implementation is different than the previous ones. The utility of the Shape::NormalBounds() method may now be better appreciated; the cone of directions that it returns gives the average normal direction and its spread angle . For area lights, , since illumination is emitted in the entire hemisphere around each surface normal.

The phi value is found by integrating over the light’s area. For lights that use an Image for spatially varying emission, the <<Compute average DiffuseAreaLight image channel value>> fragment, not included here, computes its average value. Because LightBounds accounts for whether the emitter is one- or two-sided, it is important not to double the shape’s area if it is two-sided; that factor is already included in the importance computation. (This subtlety is similar to the one for the SpotLight’s phi value.) See Figure 12.29 for an illustration of how this detail makes a difference.

Compactly Bounding Lights

The LightBounds class uses 52 bytes of storage. This is not a problem as far as the total amount of memory consumed for the lights in the scene, but it does affect performance from the amount of space it uses in the cache. For scenes with thousands of lights, multiple instances of the LightBounds will be accessed when traversing the light BVH, and so minimizing its storage requirements improves cache performance and thus overall performance. (This is especially the case on the GPU, since many threads run concurrently on each processor and each will generally follow a different path through the light BVH and thus access different LightBounds instances.)

Therefore, we have also implemented a CompactLightBounds class, which applies a number of techniques to reduce storage requirements for the LightBounds information. It uses just 24 bytes of storage. We use both classes in pbrt: the uncompressed LightBounds is convenient for lights to return from their Bounds() methods and is also a good representation to use when building the light BVH. CompactLightBounds is used solely in the in-memory representation of light BVH nodes, where minimizing size is beneficial to performance.

Its constructor takes both a LightBounds instance and a bounding box allb that must completely bound LightBounds::bounds. This bounding box is used to compute quantized bounding box vertex positions to reduce their storage requirements.

The OctahedralVector class from Section 3.8.3 stores a unit vector in 4 bytes, saving 8 from the Vector3 used in LightBounds. Then, the two cosines and the twoSided flag are packed into another 4 bytes using a bitfield, saving another 8. We have left phi alone, since the various compactions already implemented are sufficient for pbrt’s current requirements.

QuantizeCos() maps the provided value (which is expected to be the cosine of an angle and thus between and 1) to a 15-bit unsigned integer. After being remapped to be in , multiplying by the largest representable 15-bit unsigned integer, , gives a value that spans the valid range.

Note the use of pstd::floor() to round the quantized cosine value down before returning it: this is preferable to, say, rounding to the nearest integer, since it ensures that any quantization error serves to slightly increase the corresponding angle rather than decreasing it. (Decreasing it could lead to inadvertently determining that the light did not illuminate a point that it actually did.)

The bounding box corners are also quantized. Each coordinate of each corner gets 16 bits, all of them stored in the qb member variable. This brings the storage for the bounds down to 12 bytes, from 24 before. Here the quantization is also conservative, rounding down at the lower end of the extent and rounding up at the upper end.

QuantizeBounds() remaps a coordinate value c between min and max to the range , the range of values that an unsigned 16-bit integer can store.

A few convenience methods make the values of various member variables available. For the two quantized cosines, the inverse computation of QuantizeCos() is performed.

The Bounds() method returns the Bounds3f for the CompactLightBounds. It must be passed the same Bounds3f as was originally passed to its constructor for the correct result to be returned.

Finally, CompactLightBounds() also provides an Importance() method. Its implementation also requires that the original Bounds3f be provided so that the Bounds() method can be called. Given the unquantized bounds and cosines made available in appropriately named local variables, the remainder of the implementation can share the same fragments as were used in the implementation of LightBounds::Importance().

Light Bounding Volume Hierarchies

Given a way of bounding lights as well as a compact representation of these bounds, we can turn to the implementation of the BVHLightSampler. This light sampler is the default for most of the integrators in pbrt. Not only is it effective at efficiently sampling among large collections of lights, it even reduces error in simple scenes with just a few lights. Figures 12.30 and 12.31 show two examples.

Its constructor starts by making a copy of the provided array of lights before proceeding to initialize the BVH and additional data structures.

Because the BVH cannot store lights at infinity, the first step is to partition the lights into those that can be stored in the BVH and those that cannot. This is handled by a loop over all the provided lights after which the BVH is constructed.

Lights that are not able to provide a LightBounds are added to the infiniteLights array and are sampled independently of the lights stored in the BVH. As long as they have nonzero emitted power, the rest are added to the bvhLights array, which is used during BVH construction. Along the way, a bounding box that encompasses all the BVH lights’ bounding boxes is maintained in allLightBounds; this is the bounding box that will be passed to the CompactLightBounds for quantizing the spatial bounds of individual lights.

The light BVH is represented using an instance of the LightBVHNode structure for each tree node, both interior and leaf. It uses a total of 28 bytes of storage, adding just 4 to the 24 used by CompactLightBounds, though its declaration specifies 32-byte alignment, ensuring that 2 of them fit neatly into a typical 64-byte cache line on a CPU, and 4 of them fit into a 128-byte GPU cache line.

Naturally, each LightBVHNode stores the CompactLightBounds for either a single light or a collection of them. Like the BVHAggregate’s BVH, the light BVH is laid out in memory so that the first child of each interior node is immediately after it. Therefore, it is only necessary to store information about the second child’s location in the LightBVHNode. The BVHLightSampler stores all nodes in a contiguous array, so an index suffices; a full pointer is unnecessary.

Two object-creation methods return a LightBVHNode of the specified type.

The buildBVH() method constructs the BVH by recursively partitioning the lights until it reaches a single light, at which point a leaf node is constructed. Its implementation closely follows the approach implemented in the BVHAggregate::buildRecursive() method: along each dimension, the light bounds are assigned to a fixed number of buckets according to their centroids. Next, a cost model is evaluated for splitting the lights at each bucket boundary. The minimum cost split is chosen and the lights are partitioned into two sets, each one passed to a recursive invocation of buildBVH().

Because these two methods are so similar, here we will only include the fragments where the BVHLightSampler substantially diverges—in how nodes are initialized and in the cost model used to evaluate candidate splits.

When this method is called with a range corresponding to a single light, a leaf node is initialized and the recursion terminates. A CompactLightBounds is created using the bounding box of all lights’ bounds to initialize its quantized bounding box coordinates and the BVH tree node can be added to the nodes array.

In order to implement the PMF() method, it is necessary to follow a path through the BVH from the root down to the leaf node for the given light. We encode these paths using bit trails, integers where each bit encodes which of the two child nodes should be visited at each level of the tree in order to reach the light’s leaf node. The lowest bit indicates which child should be visited after the root node, and so forth. These values are computed while the BVH is built and stored in a hash table that uses Lights as keys.

When there are multiple lights to consider, the EvaluateCost() method is called to evaluate the cost model for the two LightBounds for each split candidate. In addition to the LightBounds for which to compute the cost, it takes the bounding box of all the lights passed to the current invocation of buildBVH() as well as the dimension in which the split is being performed.

The principal surface normal direction and the angles and that are stored in LightBounds are worthwhile to include in the light BVH cost function. Doing so can encourage partitions of primitives into groups that emit light in different directions, which can be helpful for culling groups of lights that do not illuminate a given receiving point. To compute these costs, pbrt uses a weighted measure of the solid angle of directions that the direction bounds subtend. A weight of 1 is used for all directions inside the center cone up to and then the remainder of directions up to are cosine-weighted, following the importance computation earlier. (See Figure 12.32.) Integrating over the relevant directions gives us the direction bounds measure,

The first term integrates to and the second has a simple analytic form that is evaluated in the second term of M_omega’s initializer below.

Three other factors go into the full cost estimate:

- The power estimate phi: in general, the higher the power of the lights in a LightBounds, the more important it is to minimize factors like the spatial and direction bounds.

- A regularization factor Kr that discourages long and thin bounding boxes.

- The surface area of the LightBounds’s bounding box.

The use of surface area in the cost metric deserves note: with the BVHAggregate, the surface area heuristic was grounded in geometric probability, as the surface area of a convex object is proportional to the probability of a random ray intersecting it. In this case, no rays are being traced. Arguably, minimizing the volume of the bounds would be a more appropriate approach in this case. In practice, the surface area seems to be more effective—one reason is that it penalizes bounding boxes that span a large extent in two dimensions but little or none in the third. Such bounding boxes are undesirable as they may subtend large solid angles, adding more uncertainty to importance estimates.

Once the lights have been partitioned, two fragments take care of recursively initializing the child nodes and then initializing the interior node. The first step is to take a spot in the nodes array for the interior node; this spot must be claimed before the children are initialized in order to ensure that the first child is the successor of the interior node in the array. Two recursive calls to buildBVH() then initialize the children. The main thing to note in them is the maintenance of the bitTrail value passed down into each one. For the first child, the corresponding bit should be set to zero. bitTrail is zero-initialized in the initial call to buildBVH(), so it has this value already and there is nothing to do. For the second call, the bit for the current tree depth is set to 1.

The interior node can be initialized after the children have been. Its light bounds are given by the union of its children’s, which allows initializing a CompactLightBounds and then the LightBVHNode itself.

Given the BVH, we can now implement the Sample() method, which samples a light given a reference point in a LightSampleContext.

The first choice to make is whether an infinite light should be sampled or whether the light BVH should be used to choose a noninfinite light. The BVHLightSampler gives equal probability to sampling each infinite light and to sampling the BVH, from which the probability of sampling an infinite light follows directly.

If an infinite light is to be sampled, then the random sample u is rescaled to provide a new uniform random sample that is used to index into the infiniteLights array.

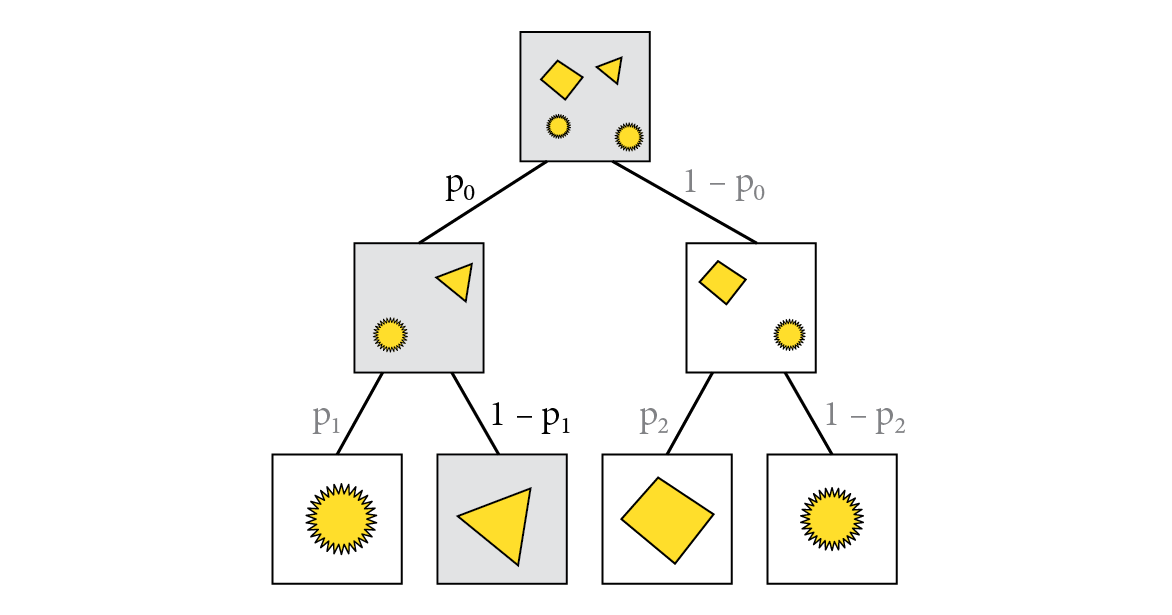

Otherwise a light is sampled by traversing the BVH. At each interior node, probabilities are found for sampling each of the two children using importance values returned by the LightBounds for the reference point. A child node is then randomly chosen according to these probabilities. In the end, the probability of choosing a leaf node is equal to the product of probabilities along the path from the root to the leaf (see Figure 12.33). With this traversal scheme, there is no need to maintain a stack of nodes to be processed as the BVHAggregate does—a single path is taken down the tree from the root to a leaf.

A few values will be handy in the following traversal of the BVH. Among them are the uniform sample u, which is remapped to a new uniform random sample in . pmf starts out with the probability of sampling the BVH in the first place; each time a child node of the tree is randomly sampled, it will be multiplied by the discrete probability of that sampling choice so that in the end it stores the complete probability for sampling the light.

At each interior node, a child node is randomly sampled. Given a leaf node, we have reached the sampled light.

The first step at an interior node is to compute the importance values for the two child nodes. It may be the case that both of them are 0, indicating that neither child illuminates the reference point. That we may end up in this situation may be surprising: in that case, why would we have chosen to visit this node in the first place? This state of affairs is a natural consequence of the accuracy of light bounds improving on the way down the tree, which makes it possible for them to better differentiate regions that their respective subtrees do and do not illuminate.

Given at least one nonzero importance value, SampleDiscrete() takes care of choosing a child node. The sampling PMF it returns is incorporated into the running pmf product. We further use its capability for remapping the sample u to a new uniform sample in , which allows the reuse of the u variable in subsequent loop iterations.

When a leaf node is reached, we have found a light. The light should only be returned if it has a nonzero importance value, however: if the importance is zero, then it is better to return no light than to return one and cause the caller to go through some additional work to sample it before finding that it has no contribution. Most of the time, we have already determined that the node’s light bounds have a nonzero importance value by dint of sampling the node while traversing the BVH in the first place. It is thus only in the case of a single-node BVH with a single light stored in it that this test must be performed here.

Computing the PMF for sampling a specified light follows a set of computations similar to those of the sampling method: if the light is an infinite light, the infinite light sampling probability is returned and otherwise the BVH is traversed to compute the light’s sampling probability. In this case, BVH traversal is not stochastic, but is specified by the bit trail for the given light, which encodes the path to the leaf node that stores it.

If the given light is not in the bit trail hash table, then it is not stored in the BVH and therefore must be an infinite light. The probability of sampling it is one over the total number of infinite lights plus one if there is a light BVH.

A number of values will be useful as the tree is traversed, including the bit trail that points the way to the correct leaf, the PMF of the path taken so far, and the index of the current node being visited, starting here at the root.

For a light that is stored in the BVH, the probability of sampling it is again computed as the product of each discrete probability of sampling the child node that leads to its leaf node.

The lowest bit of bitTrail encodes which of the two children of the node is visited on a path down to the light’s leaf node. In turn, it is possible to compute the probability of sampling that node given the two child nodes’ importance values.

The low-order bit of bitTrail also points us to which node to visit next on the way down the tree. After nodeIndex is updated, bitTrail is shifted right by one bit so that the low-order bit encodes the choice to make at the next level of the tree.

The basic Sample() and PMF() methods for when a reference point is not specified sample all the lights uniformly and so are not included here, as they parallel the implementations in the UniformLightSampler.