10.4 Image Texture

Image textures store 2D arrays of point-sampled values of a texture function. They use these samples to reconstruct a continuous image function that can be evaluated at an arbitrary position. These sample values are often called texels, since they are similar to pixels in an image but are used in the context of a texture. Image textures are the most widely used type of texture in computer graphics; digital photographs, scanned artwork, images created with image-editing programs, and images generated by renderers are all extremely useful sources of data for this particular texture representation (Figure 10.14).

As with most of the other types of texture, pbrt provides both Float and spectral variants. Both implementations inherit from ImageTextureBase, which provides some common functionality.

In the following, we will present the implementation of SpectrumImageTexture; FloatImageTexture is analogous and does not add anything new.

10.4.1 Texture Memory Management

The caller of SpectrumImageTexture’s constructor provides a texture mapping function, the filename of an image, various parameters that control the filtering of the image map, how boundary conditions are managed, and how colors are converted to spectral samples. All the necessary initialization is handled by ImageTextureBase.

As was discussed in Section 4.6.6, RGB colors are transformed into spectra differently depending on whether or not they represent reflectances. The spectrumType records what type of RGB a texture represents.

The contents of the image file are used to create an instance of the MIPMap class that stores the texels in memory and handles the details of reconstruction and filtering to reduce aliasing.

A floating-point scale can be specified with each texture; it is applied to the values returned by the Evaluate() method. Further, a true value for the invert parameter causes the texture value to be subtracted from 1 before it is returned. While the same functionality can be achieved with scale and mix textures, it is easy to also provide that functionality directly in the texture here. Doing so can lead to more efficient texture evaluation on GPUs, as is discussed further in Section 15.3.9.

Each MIP map may require a meaningful amount of memory, and a complex scene may have thousands of image textures. Because an on-disk image may be reused for multiple textures in a scene, pbrt maintains a table of MIP maps that have been loaded so far so that they are only loaded into memory once even if they are used in more than one image texture.

pbrt loads textures in parallel after the scene description has been parsed; doing so reduces startup time before rendering begins. Therefore, a mutex is used here to ensure that only one thread accesses the texture cache at a time. Note that if the MIPMap is not found in the cache, the lock is released before it is read so that other threads can access the cache in the meantime.

The texture cache itself is managed with a std::map.

TexInfo is a simple structure that acts as a key for the texture cache std::map. It holds all the specifics that must match for a MIPMap to be reused in another image texture.

The TexInfo constructor, not included here, sets its member variables with provided values. Its only other method is a comparison operator, which is required by std::map.

If the texture has not yet been loaded, a call to CreateFromFile() yields a MIPMap for it. If the file is not found or there is an error reading it, pbrt exits with an error message, so a nullptr return value does not need to be handled here.
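The caching scheme described above can be sketched as follows. This is a minimal illustration, not pbrt's actual code: `MipMapStub`, `TexKey`, and `TextureCache` are hypothetical stand-ins for MIPMap, TexInfo, and the cache, and the "load" step merely constructs a stub rather than reading a file. It shows the two points made in the text: the key type needs a comparison operator to be usable with std::map, and the lock is released while the (slow) load takes place.

```cpp
#include <map>
#include <memory>
#include <mutex>
#include <string>
#include <tuple>

// Hypothetical stand-in for a loaded MIP map.
struct MipMapStub {
    std::string filename;
};

// Hypothetical stand-in for TexInfo: everything that must match for reuse.
struct TexKey {
    std::string filename;
    int filterKind;  // placeholder for the filtering options
    int wrapMode;    // placeholder for the boundary-condition mode
    // std::map requires a strict weak ordering on its keys.
    bool operator<(const TexKey &o) const {
        return std::tie(filename, filterKind, wrapMode) <
               std::tie(o.filename, o.filterKind, o.wrapMode);
    }
};

class TextureCache {
  public:
    int loads = 0;  // number of loads performed (for illustration only)

    std::shared_ptr<MipMapStub> Get(const TexKey &key) {
        {
            // Hold the lock only while searching the table.
            std::lock_guard<std::mutex> lock(mutex_);
            auto it = cache_.find(key);
            if (it != cache_.end()) return it->second;
        }
        // Lock released: a real implementation would read the file here,
        // so that other threads can use the cache in the meantime.
        auto mip = std::make_shared<MipMapStub>(MipMapStub{key.filename});

        std::lock_guard<std::mutex> lock(mutex_);
        ++loads;
        // emplace() keeps an existing entry if another thread added one first.
        return cache_.emplace(key, mip).first->second;
    }

  private:
    std::mutex mutex_;
    std::map<TexKey, std::shared_ptr<MipMapStub>> cache_;
};
```

One consequence of releasing the lock is that two threads may occasionally load the same file concurrently; in this sketch the second result is simply discarded in favor of the entry already in the table.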

10.4.2 Image Texture Evaluation

Before describing the MIPMap implementation, we will discuss the SpectrumImageTexture Evaluate() method.

It is easy to compute the $(s,t)$ texture coordinates and their derivatives for filtering with the TextureMapping2D’s Map() method. However, the $t$ coordinate must be flipped, because pbrt’s Image class (and in turn, MIPMap, which is based on it) defines $(0,0)$ to be the upper left corner of the image, while image textures have $(0,0)$ at the lower left. (These are the typical conventions for indexing these entities in computer graphics.)

The MIPMap’s Filter() method provides the filtered value of the image texture over the specified region; any specified scale or inversion is easily applied to the value it returns. A call to ClampZero() here ensures that no negative values are returned after inversion.
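The coordinate flip and the post-filter adjustments can be sketched for a single channel as follows. The function names `FlipT` and `ScaleAndInvert` are hypothetical, and the order of operations (scale first, then inversion, then clamping at zero) is an assumption made for this illustration.

```cpp
#include <algorithm>

// Flip t so that the origin moves from the lower left of the texture
// to the upper left of the image.
inline float FlipT(float t) { return 1 - t; }

// Apply the scale and optional inversion to one filtered channel value.
// Assumption for this sketch: scale is applied before inversion.
inline float ScaleAndInvert(float value, float scale, bool invert) {
    value *= scale;
    if (invert) value = 1 - value;
    // Clamp so that values above 1 do not become negative after inversion.
    return std::max(0.f, value);
}
```

For example, a filtered value of 1 with a scale of 2 and inversion enabled would give $1 - 2 = -1$ without the clamp; with it, the result is 0.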

As discussed in Section 4.6.2, an RGB color space is necessary in order to interpret the meaning of an RGB color value. Normally, the code that reads image file formats from disk returns an RGBColorSpace with the read image. Most RGB image formats default to sRGB, and some allow specifying an alternative color space. (For example, OpenEXR allows specifying the primaries of an arbitrary RGB color space in the image file’s metadata.) A color space and the value of spectrumType make it possible to create the appropriate type of RGB spectrum, and in turn, its Spectrum::Sample() method can be called to get the SampledSpectrum that will be returned.

If the MIPMap has no associated color space, the image is assumed to have the same value in all channels and a constant value is returned for all the spectrum samples. This assumption is verified by a DCHECK() call in non-optimized builds.

10.4.3 MIP Maps

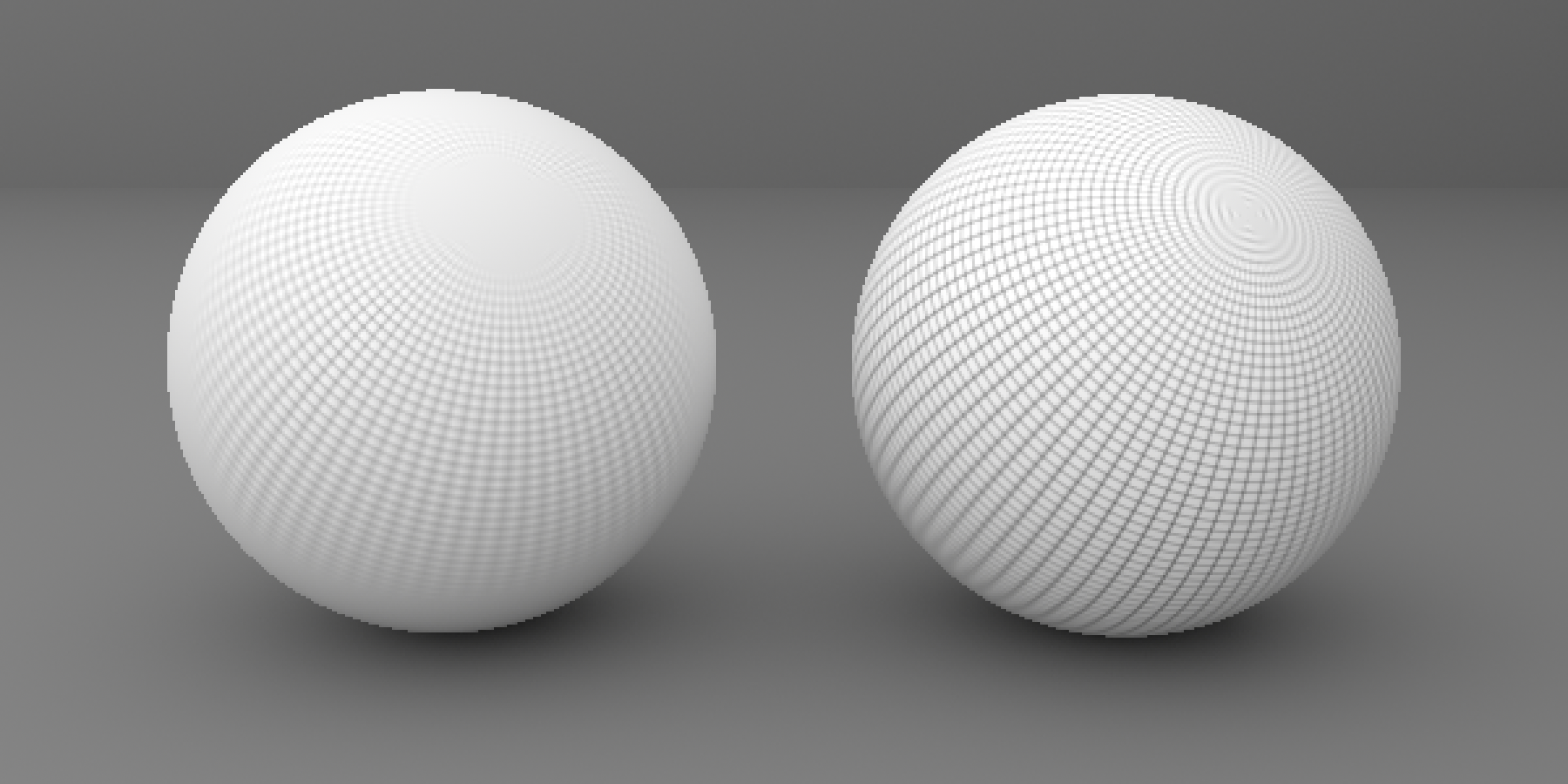

As always, if the image texture function has higher frequency detail than can be represented by the texture sampling rate, aliasing will be present in the final image. Any frequencies higher than the Nyquist limit must be removed by prefiltering before the function is evaluated. Figure 10.15 shows the basic problem we face: an image texture has texels that are samples of some image function at a fixed frequency. The filter region for the lookup is given by its center point and offsets to the estimated texture coordinate locations for the adjacent image samples. Because these offsets are estimates of the texture sampling rate, we must remove any frequencies higher than twice the distance to the adjacent samples in order to satisfy the Nyquist criterion.

The texture sampling and reconstruction process has a few key differences from the image sampling process discussed in Chapter 8. These differences make it possible to address the antialiasing problem with more effective and less computationally expensive techniques. For example, here it is inexpensive to get the value of a sample—only an array lookup is necessary (as opposed to having to trace a number of rays to compute radiance). Further, because the texture image function is fully defined by the set of samples and there is no mystery about what its highest frequency could be, there is no uncertainty related to the function’s behavior between samples. These differences make it possible to remove detail from the texture before sampling, thus eliminating aliasing.

However, the texture sampling rate will typically change from pixel to pixel. The sampling rate is determined by scene geometry and its orientation, the texture coordinate mapping function, and the camera projection and image sampling rate. Because the texture sampling rate is not fixed, texture filtering algorithms need to be able to filter over arbitrary regions of texture samples efficiently.

The MIPMap class implements a number of methods for texture filtering with spatially varying filter widths. It can be found in the files util/mipmap.h and util/mipmap.cpp. The filtering algorithms it offers range from simple point sampling to bilinear interpolation and trilinear interpolation, which is fast and easy to implement and was widely used for texture filtering in early graphics hardware, to elliptically weighted averaging, which is more complex but returns extremely high-quality results. Figure 10.16 compares the result of texture filtering using trilinear interpolation and the EWA algorithm.

If an RGB image is provided to the MIPMap constructor, its channels should be stored in R, G, B order in memory; for efficiency, the following code assumes that this is the case. All the code that currently uses MIPMaps in pbrt ensures that this is so.

To limit the potential number of texels that need to be accessed, these filtering methods use an image pyramid of increasingly lower resolution prefiltered versions of the original image to accelerate their operation. The original image texels are at the bottom level of the pyramid, and the image at each level is half the resolution of the previous level, up to the top level, which has a single texel representing the average of all the texels in the original image. This collection of images needs at most $1/3$ more memory than storing the most detailed level alone and can be used to quickly find filtered values over large regions of the original image. The basic idea behind the pyramid is that if a large area of texels needs to be filtered, a reasonable approximation is to use a higher level of the pyramid and do the filtering over the same area there, accessing many fewer texels.
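The memory overhead of the pyramid follows from a geometric series: each level has one quarter as many texels as the one below it, so the extra levels together add $1/4 + 1/16 + \cdots < 1/3$ of the base level's storage. The following sketch counts levels and texels for a square power-of-two image; `PyramidSize` and `CountPyramid` are hypothetical names used only for this illustration.

```cpp
#include <cstdint>

struct PyramidSize {
    int levels;            // number of pyramid levels, including the base
    std::uint64_t texels;  // total texels summed over all levels
};

// Count levels and texels for a square res x res image, where res is
// assumed to be a power of two. Each level halves the resolution.
inline PyramidSize CountPyramid(int res) {
    PyramidSize p{0, 0};
    for (int r = res; r >= 1; r /= 2) {
        ++p.levels;
        p.texels += std::uint64_t(r) * std::uint64_t(r);
    }
    return p;
}
```

For a $1024 \times 1024$ image this gives 11 levels, and the levels above the base hold 349,525 texels in total, just under one third of the 1,048,576 texels in the base level.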

The MIPMap’s image pyramid is represented by a vector of Images. See Section B.5 for the implementation of Image and Section B.5.5 for its GeneratePyramid() method, which generates image pyramids.

The choice of filtering algorithm and a parameter used by the EWA method are represented by MIPMapFilterOptions.

A few simple utility methods return information about the image pyramid and the MIPMap’s color space.

Given the image pyramid, we will define some utility MIPMap methods that retrieve the texel value at a specified pyramid level and discrete integer pixel coordinates. For the RGB variant, there is an implicit assumption that the image channels are laid out in R, G, B (and maybe A) order.

The Float specialization of Texel(), not included here, is analogous.

10.4.4 Image Map Filtering

The MIPMap Filter() method returns a filtered image function value at the provided $(s,t)$ coordinates. It takes two derivatives that give the change in $(s,t)$ with respect to image pixel samples.

The EWA filtering technique to be described shortly uses both derivatives of $(s,t)$ to compute an anisotropic filter, one that filters by different amounts in the different dimensions. The other three use an isotropic filter that filters both equally. The isotropic filters are more computationally efficient than the anisotropic filter, though they do not give results that are as good. For them, only a single value is needed to specify the width of the filter. The width here is conservatively chosen to avoid aliasing in both the $s$ and $t$ directions, though this choice means that textures viewed at an oblique angle will appear blurry, since the required sampling rate in one direction will be very different from the sampling rate along the other in this case.
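One conservative way to reduce the two derivative vectors to a single isotropic width is to take twice the largest absolute component, which covers the widest extent in either direction. The sketch below assumes that particular rule; the function name `IsotropicFilterWidth` is hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Conservative isotropic filter width from the two (s,t) derivative
// vectors (ds0, dt0) and (ds1, dt1): twice the largest absolute component.
// The factor of two and the use of max are assumptions of this sketch.
inline float IsotropicFilterWidth(float ds0, float dt0, float ds1, float dt1) {
    return 2 * std::max({std::abs(ds0), std::abs(dt0),
                         std::abs(ds1), std::abs(dt1)});
}
```

With derivatives $(0.1, 0)$ and $(0, -0.25)$, the width is 0.5 in both directions, even though a width of 0.2 would have sufficed along $s$; this is the source of the blurring at oblique angles mentioned above.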

Because filtering over many texels for wide filter widths would be inefficient, this method chooses a MIP map level from the pyramid such that the filter region at that level would cover four texels at that level. Figure 10.17 illustrates this idea.

Since the resolutions of the levels of the pyramid are all powers of two, the resolution of level $l$ is $2^{\text{nLevels}-1-l}$. Therefore, to find the level with a texel spacing width $w$ requires solving

$$\frac{1}{w} = 2^{\text{nLevels}-1-l}$$

for $l$. In general, this will be a floating-point value between two MIP map levels. Values of $l$ greater than the number of pyramid levels correspond to a filter width wider than the image, in which case the single pixel at the top level is returned.
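If level $l$ of an `nLevels`-level pyramid has resolution $2^{\text{nLevels}-1-l}$, then a texel spacing of $w$ corresponds to $l = \text{nLevels} - 1 + \log_2 w$. The sketch below computes this value; the function name `MipLevel` and the small lower bound on the width (used to avoid taking the logarithm of zero) are assumptions made for this illustration.

```cpp
#include <algorithm>
#include <cmath>

// Continuous MIP level whose texel spacing matches the filter width,
// assuming level l has resolution 2^(nLevels - 1 - l).
inline float MipLevel(float width, int nLevels) {
    // Guard against log2(0) for degenerate (zero-width) filters.
    return nLevels - 1 + std::log2(std::max(width, 1e-8f));
}
```

With 11 levels (a $1024 \times 1024$ base image), a width of 1 maps to level 10, the single-texel top of the pyramid, and a width of $1/1024$ maps to level 0, the full-resolution image. Very small widths give negative levels, which a caller would treat as level 0.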

For a point-sampled texture lookup, it is only necessary to convert the continuous texture coordinates over $[0,1]$ to discrete coordinates over the image resolution and to retrieve the appropriate texel value via the MIPMap’s Texel() method.

Bilinear filtering, which is equivalent to filtering using a triangle filter, is easily implemented via a call to Bilerp().

Bilinear interpolation is provided in a separate method so that it can also be used for trilinear filtering.
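A bilinear lookup on a single-channel image might look like the following sketch. The `GrayImage` type and its clamping `Texel()` method are hypothetical simplifications; a full implementation would also support other boundary modes and multiple channels. The 0.5 offset aligns continuous coordinates with texel centers.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical single-channel image with clamp-to-edge texel access.
struct GrayImage {
    int width, height;
    std::vector<float> texels;  // row-major storage
    float Texel(int x, int y) const {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return texels[y * width + x];
    }
};

// Bilinearly interpolate the four texels around (s,t), with s,t in [0,1].
inline float Bilerp(const GrayImage &img, float s, float t) {
    // Map to continuous texel coordinates; texel centers are at i + 0.5.
    float x = s * img.width - 0.5f, y = t * img.height - 0.5f;
    int xi = int(std::floor(x)), yi = int(std::floor(y));
    float dx = x - xi, dy = y - yi;
    return (1 - dx) * (1 - dy) * img.Texel(xi, yi) +
           dx * (1 - dy) * img.Texel(xi + 1, yi) +
           (1 - dx) * dy * img.Texel(xi, yi + 1) +
           dx * dy * img.Texel(xi + 1, yi + 1);
}
```

For a $2 \times 2$ image, a lookup at $(0.25, 0.25)$ lands exactly on the center of the first texel and returns its value, while a lookup at $(0.5, 0.5)$ returns the average of all four texels.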

As shown by Figure 10.17, applying a triangle filter to the four texels around the sample point will either filter over too small a region or too large a region (except for very carefully selected filter widths). Therefore, the Trilinear filtering option applies the triangle filter at both of these levels and blends between them according to how close level is to each of them. This helps hide the transitions from one MIP map level to the next at nearby pixels in the final image. While applying a triangle filter to four texels at two levels in this manner does not generally give exactly the same result as applying a triangle filter to the original pixels, the difference is not too bad in practice, and the efficiency of this approach is worth this penalty. In any case, the following elliptically weighted average filtering approach should be used when texture quality is important.
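The blend between adjacent levels can be sketched independently of the image representation. In the following illustration, `Trilinear` is a hypothetical function, and `bilerpAtLevel` is a caller-supplied callable that stands in for a bilinear lookup at a given integer pyramid level.

```cpp
#include <cmath>
#include <functional>

// Blend bilinear lookups at the two integer levels bracketing `level`.
// Levels outside [0, nLevels - 1] fall back to the nearest valid level.
inline float Trilinear(float level, int nLevels,
                       const std::function<float(int)> &bilerpAtLevel) {
    if (level <= 0) return bilerpAtLevel(0);
    if (level >= nLevels - 1) return bilerpAtLevel(nLevels - 1);
    int iLevel = int(std::floor(level));
    float delta = level - iLevel;  // fractional distance to the next level
    return (1 - delta) * bilerpAtLevel(iLevel) +
           delta * bilerpAtLevel(iLevel + 1);
}
```

A level of 1.25, for example, weights the lookup at level 1 by 0.75 and the lookup at level 2 by 0.25, so the result changes smoothly as the filter width varies across nearby pixels.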

The elliptically weighted average (EWA) algorithm fits an ellipse to the two differential vectors in texture space and then filters the texture with a Gaussian filter function (Figure 10.18). It is widely regarded as one of the best texture filtering algorithms in graphics and has been carefully derived from the basic principles of sampling theory. Unlike the triangle filter, it can filter over arbitrarily oriented regions of the texture, with different filter extents in different directions. The quality of its results is improved by it being an anisotropic filter, since it can adapt to different sampling rates along the two image axes.

We will not show the full derivation of this filter here, although we do note that it is distinguished by being a unified resampling filter: it simultaneously computes the result of a Gaussian filtered texture function convolved with a Gaussian reconstruction filter in image space. This is in contrast to many other texture filtering methods that ignore the effect of the image-space filter or equivalently assume that it is a box. Even if a Gaussian is not being used for filtering the samples for the image being rendered, taking some account of the spatial variation of the image filter improves the results, assuming that the filter being used is somewhat similar in shape to the Gaussian, as the Mitchell and windowed sinc filters are.

The screen-space partial derivatives of the texture coordinates define the ellipse. The lookup method starts out by determining which of the two axes is the longer of the two, swapping them if needed so that dst0 is the longer vector. The length of the shorter vector will be used to select a MIP map level.

Next the ratio of the length of the longer vector to the length of the shorter one is considered. A large ratio indicates a very long and skinny ellipse. Because this method filters texels from a MIP map level chosen based on the length of the shorter differential vector, a large ratio means that a large number of texels need to be filtered. To avoid this expense (and to ensure that any EWA lookup takes a bounded amount of time), the length of the shorter vector may be increased to limit this ratio. The result may be an increase in blurring, although this effect usually is not noticeable in practice.
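The axis ordering and the clamping of the ellipse's eccentricity can be sketched as follows. `Vec2`, `Length`, and `LimitAnisotropy` are hypothetical names, and `maxAniso` plays the role of the EWA parameter that bounds the ratio of the two axis lengths.

```cpp
#include <cmath>
#include <utility>

struct Vec2 {
    float x, y;
};

inline float Length(Vec2 v) { return std::sqrt(v.x * v.x + v.y * v.y); }

// Ensure dst0 is the longer axis, then lengthen the shorter axis if the
// ratio of the two lengths exceeds maxAniso.
inline void LimitAnisotropy(Vec2 &dst0, Vec2 &dst1, float maxAniso) {
    if (Length(dst0) < Length(dst1)) std::swap(dst0, dst1);
    float longer = Length(dst0), shorter = Length(dst1);
    if (shorter > 0 && shorter * maxAniso < longer) {
        // Scale the shorter axis so that longer / shorter == maxAniso.
        float scale = longer / (shorter * maxAniso);
        dst1.x *= scale;
        dst1.y *= scale;
    }
}
```

With axes of length 0.8 and 0.01 and a maximum ratio of 8, the shorter axis is stretched to length 0.1. The ellipse then covers more texture area than strictly required, which is the source of the additional blurring mentioned above.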

Like the triangle filter, the EWA filter uses the image pyramid to reduce the number of texels to be filtered for a particular texture lookup, choosing a MIP map level based on the length of the shorter vector. Given the limited ratio from the clamping above, the total number of texels used is thus bounded. Given the length of the shorter vector, the computation to find the appropriate pyramid level is the same as was used for the triangle filter. Similarly, the implementation here blends between the filtered results at the two levels around the computed level of detail, again to reduce artifacts from transitions from one level to another.

The MIPMap::EWA() method actually applies the filter at a particular level.

This method first converts from texture coordinates in $[0,1]$ to coordinates and differentials in terms of the resolution of the chosen MIP map level. It also subtracts 0.5 from the continuous position coordinate to align the sample point with the discrete texel coordinates, as was done in MIPMap::Bilerp().

It next computes the coefficients of the implicit equation for the ellipse centered at the origin that is defined by the vectors (ds0,dt0) and (ds1,dt1). Placing the ellipse at the origin rather than at $(s,t)$ simplifies the implicit equation and the computation of its coefficients and can be easily corrected for when the equation is evaluated later. The general form of the implicit equation for all points $(s,t)$ inside such an ellipse is

$$e(s,t) = A s^2 + B s t + C t^2 < F,$$

although it is more computationally efficient to divide through by $F$ and express this as

$$e(s,t) = \frac{A}{F} s^2 + \frac{B}{F} s t + \frac{C}{F} t^2 = A' s^2 + B' s t + C' t^2 < 1.$$

We will not derive the equations that give the values of the coefficients, although the interested reader can easily verify their correctness.

The next step is to find the axis-aligned bounding box in discrete integer texel coordinates of the texels that are potentially inside the ellipse. The EWA algorithm loops over all of these candidate texels, filtering the contributions of those that are in fact inside the ellipse. The bounding box is found by determining the minimum and maximum values that the ellipse takes in the $s$ and $t$ directions. These extrema can be calculated by finding the partial derivatives $\partial e/\partial s$ and $\partial e/\partial t$, finding their solutions for $s=0$ and $t=0$, and adding the offset to the ellipse center. For brevity, we will not include the derivation for these expressions here.
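For a normalized ellipse $As^2 + Bst + Ct^2 = 1$, the extreme values work out to $\pm 2\sqrt{C/(4AC - B^2)}$ along the first axis and $\pm 2\sqrt{A/(4AC - B^2)}$ along the second. The following sketch uses those expressions to compute integer texel bounds around a given center; `TexelBounds` and `EllipseBounds` are hypothetical names.

```cpp
#include <cmath>

struct TexelBounds {
    int s0, s1, t0, t1;  // inclusive integer texel ranges
};

// Bounding box of the normalized ellipse A s^2 + B s t + C t^2 < 1,
// translated so that its center is at (sc, tc).
inline TexelBounds EllipseBounds(float A, float B, float C,
                                 float sc, float tc) {
    float det = 4 * A * C - B * B;  // positive for a valid ellipse
    float invDet = 1 / det;
    float sExtent = 2 * invDet * std::sqrt(det * C);
    float tExtent = 2 * invDet * std::sqrt(A * det);
    // Round inward: only texels whose coordinates lie within the extent
    // can possibly be inside the ellipse.
    return {int(std::ceil(sc - sExtent)), int(std::floor(sc + sExtent)),
            int(std::ceil(tc - tExtent)), int(std::floor(tc + tExtent))};
}
```

As a check, an axis-aligned ellipse with radii 1 and 4 has $A = 1$, $B = 0$, and $C = 1/16$; the expressions above then give extents of exactly 1 and 4.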

Now that the bounding box is known, the EWA algorithm loops over the texels, transforming each one to the coordinate system where the texture lookup point is at the origin with a translation. It then evaluates the ellipse equation to see if the texel is inside the ellipse (Figure 10.19) and computes the filter weight for the texel if so. The final filtered value returned is a weighted sum over texels $T(s',t')$ inside the ellipse, where $f$ is the Gaussian filter function:

$$\frac{\sum f(s'-s, t'-t)\, T(s',t')}{\sum f(s'-s, t'-t)}.$$

A nice feature of the implicit equation is that its value at a particular texel is the squared ratio of the distance from the center of the ellipse to the texel to the distance from the center of the ellipse to the ellipse boundary along the line through that texel (Figure 10.19). This value can be used to index into a precomputed lookup table of Gaussian filter function values.

The lookup table is precomputed and available as a constant array. Similar to the GaussianFilter used for image reconstruction, the filter function is offset so that it goes to zero at the end of its extent rather than having an abrupt step. It is

$$e^{-\alpha r^2} - e^{-\alpha},$$

and $\alpha = 2$ was used for the table in pbrt. Because the table is indexed with squared distances from the filter center $r^2$, each entry stores a value $e^{-\alpha r} - e^{-\alpha}$, rather than $e^{-\alpha r^2} - e^{-\alpha}$.
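The table construction and the filtering loop can be sketched together as follows. This is an illustration under stated assumptions rather than pbrt's implementation: the table size of 128, the default falloff of 2, and the names `MakeGaussianLut` and `EwaSum` are choices made for this sketch, and the table is built at run time here rather than stored as a constant array. The `texel` argument is a caller-supplied callable that returns the texel value at integer coordinates.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

constexpr int kLutSize = 128;  // assumed table size for this sketch

// Table of offset Gaussian weights indexed by squared normalized distance:
// entry i holds exp(-alpha * r2) - exp(-alpha) with r2 = i / (size - 1).
inline std::vector<float> MakeGaussianLut(float alpha = 2.f) {
    std::vector<float> lut(kLutSize);
    for (int i = 0; i < kLutSize; ++i) {
        float r2 = float(i) / float(kLutSize - 1);
        lut[i] = std::exp(-alpha * r2) - std::exp(-alpha);
    }
    return lut;
}

// Weighted average of the texels inside the normalized ellipse
// A s^2 + B s t + C t^2 < 1 centered at (sc, tc), scanning the inclusive
// integer bounding box [s0, s1] x [t0, t1].
template <typename TexelFn>
float EwaSum(TexelFn texel, const std::vector<float> &lut,
             float A, float B, float C, float sc, float tc,
             int s0, int s1, int t0, int t1) {
    float sum = 0, sumWeights = 0;
    for (int it = t0; it <= t1; ++it) {
        float tt = it - tc;  // translate so the lookup point is the origin
        for (int is = s0; is <= s1; ++is) {
            float ss = is - sc;
            // Squared ratio of distance-to-texel over distance-to-boundary.
            float r2 = A * ss * ss + B * ss * tt + C * tt * tt;
            if (r2 < 1) {
                int index = std::min(int(r2 * kLutSize), kLutSize - 1);
                float weight = lut[index];
                sum += weight * texel(is, it);
                sumWeights += weight;
            }
        }
    }
    // Fall back to zero if no texel was inside the ellipse.
    return sumWeights > 0 ? sum / sumWeights : 0.f;
}
```

Because the result is normalized by the sum of the weights, filtering a constant texture returns that constant regardless of the ellipse's shape, which is a convenient sanity check for an implementation.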